Readings for 9/26/18

Readings for next week are now posted. Although the readings are not ordered, the order listed is probably the best one.

Make sure you respond with your comments by 9/25 at noon.

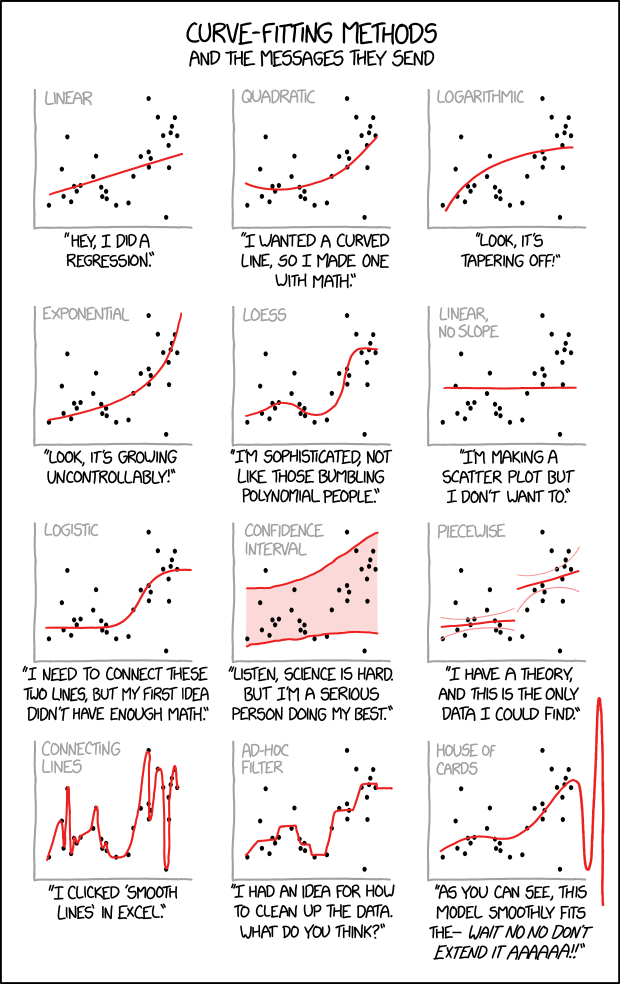

A few "goodies" follow: a humorous cartoon about curve fitting, and an example of boosting.

The following example of boosting is taken from here. I've removed the code and some of the math below to make it easier to follow.

1. Start with some data. We use the normal distribution.

2. Fit an initial model. Here we fit a line. You could view this as an intercept-only line or a line with a slope of zero. In either case, it's not hard to see that the residuals are balanced above and below the line, which is what line fitting is supposed to do.

3. Fit a model on the residuals from step 2 (step 2 residuals). This time we use a decision stump, which is a decision tree with one branch point. This is the stump we fit:

As you can see, any x (the horizontal axis) less than about 0 will now go to -.33 and everything else will go to .12. We can plot the prediction of this model:

The predictions are a "stair step" with two levels, one for each branch of the tree. If you look at the y axis, you can verify that the steps are about -.33 and .12. It's important to recognize that you're looking at predictions on the step 2 residuals, because this first decision stump model was built on those residuals. Don't get confused by the fact that the data has the same shape as before; that is only because our first model was a flat line, so subtracting it shifted every point by the same constant amount (the intercept, about .5).

What have we accomplished by building a model on the step 2 residuals? Remember our goal is to drive the residuals (the error) to zero. If we subtract the decision stump's predictions from the step 2 residuals, what remains (the step 3 residuals) is closer to zero:
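For readers who want to see the arithmetic behind steps 2 and 3, here is a minimal Python sketch. It is not the code from the original post (that code was removed above); the bell-shaped data, the noise level, and the names `fit_stump`, `predict_stump`, and `sum_of_squares` are all hypothetical. It assumes squared-error loss, under which the best intercept-only model is the mean of y and each side of a stump predicts the mean residual on that side, which is presumably how levels like -.33 and .12 arise.

```python
import math
import random

# Hypothetical data (an assumption, not the post's dataset): a bell-shaped
# curve plus a little Gaussian noise, sampled on an evenly spaced grid.
random.seed(0)
xs = [i / 10 for i in range(-30, 31)]
ys = [math.exp(-x * x / 2) + random.gauss(0, 0.1) for x in xs]

# Step 2: the best intercept-only "line" under squared error is the mean of y.
intercept = sum(ys) / len(ys)
step2_residuals = [y - intercept for y in ys]


def fit_stump(xs, residuals):
    """Fit a one-split tree by squared error (needs at least two distinct x).

    Returns (threshold, left_value, right_value); each side predicts the
    mean residual of the points that fall on that side.
    """
    pairs = sorted(zip(xs, residuals))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate two equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [r for _, r in pairs[:i]]
        right = [r for _, r in pairs[i:]]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        split_error = (sum((r - left_value) ** 2 for r in left)
                       + sum((r - right_value) ** 2 for r in right))
        if best is None or split_error < best[0]:
            best = (split_error, threshold, left_value, right_value)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x < threshold else right_value


def sum_of_squares(values):
    return sum(v * v for v in values)


# Step 3: fit a stump to the step 2 residuals, then subtract its predictions.
stump = fit_stump(xs, step2_residuals)
step3_residuals = [r - predict_stump(stump, x)
                   for x, r in zip(xs, step2_residuals)]

print("stump (threshold, left, right):", stump)
print("step 2 sum of squares:", sum_of_squares(step2_residuals))
print("step 3 sum of squares:", sum_of_squares(step3_residuals))
```

The residuals from the intercept-only model sum to (numerically) zero, which is the "balanced above and below the line" property from step 2. The sum of squared residuals after the stump can be no larger than before, because on each side of the split the mean residual fits at least as well as predicting zero.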

4. So let's keep going: build a new decision stump model on the step 3 residuals. Here's the stump:

And its predictions:

And we can subtract this stump's predictions from the step 3 residuals to get the step 4 residuals and see if we're getting closer to zero:

5. One last time:

Stump

Predictions

Residuals

6. Adding it up. Previously we showed that the difference in residuals between successive models was going to zero. That means at each step, we are successively capturing a small piece of error from the previous model. When we hit zero, we know that we've modeled all the error in our chain of models. What do we do with it? Recall that the error is just the difference between our predictions and the actual data point. What if we added those differences together? Then even though our initial model was bad, each successive model will take us just a little bit closer to the actual data. All we have to do is sum the predictions from all of our models together to get the "final" prediction.
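Steps 2 through 6 can be sketched as one short loop. This is a hypothetical illustration in plain Python rather than the post's original code: the data and all function names (`fit_stump`, `predict_stump`, `boost`, `predict`) are assumptions, and the loss is assumed to be squared error. Each pass fits a stump to the current residuals and subtracts its predictions; the final prediction is the intercept plus the sum of every stump's prediction, as step 6 describes.

```python
import math
import random


def fit_stump(xs, residuals):
    """One-split regression tree under squared error (leaf value = mean residual)."""
    pairs = sorted(zip(xs, residuals))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # equal x values cannot be separated by a threshold
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [r for _, r in pairs[:i]]
        right = [r for _, r in pairs[i:]]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, threshold, lv, rv)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x < threshold else right_value


def boost(xs, ys, n_stumps):
    """Start from the mean, then repeatedly fit stumps to the residuals."""
    intercept = sum(ys) / len(ys)             # step 2: intercept-only model
    residuals = [y - intercept for y in ys]
    stumps = []
    for _ in range(n_stumps):                 # steps 3, 4, 5, ...
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        residuals = [r - predict_stump(stump, x) for x, r in zip(xs, residuals)]
    return intercept, stumps


def predict(model, x):
    """Step 6: the final prediction is the sum of every model's prediction."""
    intercept, stumps = model
    return intercept + sum(predict_stump(s, x) for s in stumps)


# Hypothetical bell-shaped data with noise (an assumption, not the post's dataset).
random.seed(1)
xs = [i / 10 for i in range(-30, 31)]
ys = [math.exp(-x * x / 2) + random.gauss(0, 0.1) for x in xs]

for n in (0, 1, 3, 10, 50):
    model = boost(xs, ys, n)
    mse = sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)
    print(f"{n:3d} stumps: training MSE = {mse:.4f}")
```

The training error never goes up as stumps are added, which matches the post's picture of each model capturing another small piece of the remaining error. Common gradient-boosting libraries (for example scikit-learn's `GradientBoostingRegressor`) also scale each tree's contribution by a `learning_rate`; the walkthrough above does not, so neither does this sketch.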

I made a hypothetical gif of what it might look like if we kept going. The idea is that we're approximating the curve using lots of horizontal line segments. If you haven't taken calculus, this is one of its key ideas.
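The "horizontal line segments" picture can be checked directly: each stump is a step function with a single jump, so a sum of k stumps is constant between consecutive thresholds and has at most k + 1 segments. The sketch below assumes three hypothetical stumps (the 0.5 intercept and the -0.33/0.12 levels echo the post; the other numbers are made up) and lists the resulting segments.

```python
import math

# Hypothetical model: an intercept plus three stumps written as
# (threshold, left_value, right_value). The 0.5, -0.33 and 0.12 echo the
# post; every other number is made up for illustration.
INTERCEPT = 0.5
STUMPS = [(0.0, -0.33, 0.12), (-1.0, -0.2, 0.05), (1.5, 0.03, -0.1)]


def predict(x):
    """Intercept plus every stump's prediction (left side if x < threshold)."""
    return INTERCEPT + sum(left if x < t else right for t, left, right in STUMPS)


def segments():
    """Return (start, end, level) for each horizontal segment [start, end)."""
    cuts = sorted(t for t, _, _ in STUMPS)
    edges = [-math.inf] + cuts + [math.inf]
    result = []
    for start, end in zip(edges, edges[1:]):
        # Pick any x inside the interval; the summed prediction is constant there.
        if math.isinf(start) and math.isinf(end):
            sample = 0.0
        elif math.isinf(start):
            sample = end - 1.0
        elif math.isinf(end):
            sample = start
        else:
            sample = (start + end) / 2
        result.append((start, end, predict(sample)))
    return result


for start, end, level in segments():
    print(f"[{start}, {end}): {level:.2f}")
```

Adding more stumps only adds more cut points, so the staircase gets finer; that is the sense in which a long chain of stumps can trace out a smooth curve.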

Machine Learning & A.I. - CCCS #34

I liked how this video broke down machine learning into very basic concepts. It is a great introductory video for machine learning. The Artificial Neural Networks example was a great visual to understand how deep learning takes place. The examples at the end of the video of how we are approaching strong AI but are still not capable of human-like intelligence were very interesting.

Model Tuning and the Bias-Variance Tradeoff

This website gave an excellent explanation of the bias-variance tradeoff (small vs. overly complex trees). I guess my question here is how do you know when you have arrived at the best model? Is it more of a trial and error approach or is there a way to know it is the best model?

Deitz 2016 How Neurological Measures Work in Advertising

It’s amazing how today we can use EEG and other neuroscience and physiological measurements to measure things we may not even realize we are doing in response to advertisements. The amount of money spent on Super Bowl ads every year ($2 billion, according to the article) makes me wonder how many companies can justify this cost on their balance sheet. Is the cost of one Super Bowl ad recouped through sales from that ad? I think as this technology progresses and we are able to understand more of the effects that advertisements have on consumer responses, we will need to make sure that these types of technologies are not misused by companies to the detriment of the consumer. If used ethically and effectively, I do believe this type of measurement will make a huge impact on the types of advertisements companies create to represent their brands.

Deitz 2017 Role of Narrativity and Prosodic Features in Driving the Virality of Online Video Content

The statistics reported in the first paragraph were astounding. It is amazing how much our lives revolve around technology and social media today, but it is also not surprising that these statistics are reality. I think the question of “why certain pieces of content spread more widely and rapidly than others” is something that needs a great deal of study. This question has major implications for businesses as well as anyone sending a message (governments, political parties, etc.). Thinking back to videos I have personally shared on Facebook, liked on YouTube, or even watched on TED, I would agree that there is a narrative, but the speaker’s voice, speed, pauses, and intonation on particular words all had an impact too. Certain speakers have the ability to draw you in. Something I would like to know is this: if we can identify what drives online virality, is it recreatable by anyone (i.e., can it be learned and replicated)? Or does it take true talent?

I thought the Crash Course video was helpful in its coverage of classification trees and its rudimentary explanation of the mechanisms of neural networks. I’ve been meaning to ask whether classification problems with so many variables are solved in multidimensional space, or whether some kind of linear projection of all the variables is created to form 2 or 3 axes that are then divided with boundaries, and this video answered that for me. I’m eager to learn how to actually begin constructing simple neural networks to get used to how they work.

My favorite part of the tree scrolly site is the knobs that display the bias/variance tradeoff with regard to accuracy. Last week, we talked about whether there was an optimal accuracy to shoot for on a training set to avoid overfitting while still being viable (I think that was posed by John B). I think this illustration shows that it really just depends on the data, and that you really just have to run a few different things and go with whatever winds up being optimal. Luckily, we can do that with computers relatively quickly (well, the computers can do it quickly, though the setup might be tricky).

Regarding the Super Bowl ads/EEG study, I think it’s fascinating that the measure of neural engagement was a better predictor of ad interactions than the ad ratings themselves. I’ve worked on a project that sought to compare implicit (i.e., cognitive) and explicit (i.e., self-report) measures of the same constructs, and the two methodological paradigms often seem unassociated and rarely predict behaviors concordantly. This is not to say that either methodology is superior, but the difference between what we experience neurocognitively and what we report in surveys is pretty astounding. Anyway, I think an additional moderator that could be explored in future studies is participants’ expectations regarding the ads. The authors note that more attention is paid to Super Bowl ads than to any other ads, and the participants in this study knew that the ads were Super Bowl ads, which are therefore more likely to be engaging, emotional, humorous, etc. To illustrate: if you showed the same set of ads to one group who knew they were Super Bowl ads and to another group who were not told the context (or were told they were regular TV ads from some random channel), do you think there would be differences in the EEG data, the self-report ad ratings, or (to whatever extent you could measure it) the behavioral engagement data?

This question extends to the second article. I think that viewer expectations might influence the effects of narrativity, prosody, and even the interactions that the authors constructed. Speaking of the interactions, I had a specific question about the stats presented. It seems that the table and results provided were from a linear regression. I probably have a much more tenuous grasp of the statistics involved, both conceptually and in the actual calculations, but isn’t it the case that main effects shouldn’t be interpreted when significant interactions are present? The authors note that there were significant main effects of narrativity, prosody, and pace, but also show that these variables have a significant interaction with affective language. Maybe I’m unclear, but to draw this conclusion, shouldn’t this have been constructed as a hierarchical regression with the main effects in one step and the interactions in another, or include separate analyses demonstrating that these main effects hold regardless of the interactions? It could be that the authors did control for this properly, or that their interpretations are perfectly valid regardless, and I’m not used to being pedantic about stats, but that rule was preached to me in stats, and I think I’ll never forget it. I’d love to know more about it if anyone can shed any light.

PBS and r2d3:

The video mentions weak vs. strong AI. Of course, all current AI is weak. One used to hear ambitious calls from within cognitive science to pursue strong AI. Look at this enthusiastic passage from John Haugeland’s 1985 book Artificial Intelligence: The Very Idea:

“And the epitome of the entire drama [viz., the cognitive revolution] is Artificial Intelligence, the exciting new effort to make computers think. The fundamental goal of this research is not merely to mimic intelligence or produce some clever fake. Not at all. ‘AI’ wants only the genuine article: machines with minds, in the full and literal sense” (Haugeland, 1985, p. 2).

Cognitive science has generally backed off from such ambitious claims since then, although of course AGI (Artificial General Intelligence) systems are still being pursued. Do the big data revolution and machine learning techniques like those mentioned in r2d3 change this landscape at all? If strong AI was impossible using the old logic and expert systems, does machine learning change anything today? And, even if it does and we can get amazing AGIs, are we any closer to strong AI in the sense that Haugeland talks about?

Deitz et al. (2016, 2017):

What is the role of the scientist? Is the scientist the one who discovers truths about nature, the one who advances human knowledge and society, the one who struggles within an institution for economic security, or a technician for big business and the military? Is cognitive science, or neuroscience, something that ameliorates human suffering, fosters human flourishing, and advances human knowledge, or is it something that sells beer and makes BigDogs (warbots)? Is neuromarketing something that we ought to have?

Machine learning crash course:

I really enjoyed the video's visualizations of neural networks, along with the explanation of weak AI and strong AI. These are terms I've heard before, but I had no idea what they meant, and it was helpful to have that clarified. I wonder how many additional dimensions and levels of deep neural networks would be necessary to create a strong AI closer to human intelligence; I'm sure it is much more complex than I can possibly imagine, but it would be so interesting to see.

r2d3 scrolly:

I liked how this example built on the house one from last week. My main question is, how easy would it be to predict the lowest error rate in the bias-variance tradeoff, so you know when you should stop trying to improve the accuracy of your decision tree in order to prevent overfitting the data? Does it make more sense to overfit your data and then go back and remove a few layers of your tree once you know that lower limit of error?

Deitz (2016, 2017):

I thought it was interesting to read these studies, which dealt with media (Super Bowl ads, TED talks) that are popular and that I am personally familiar with.

Regarding the 2016 article, I've seen a statistic before about the unbelievably high number of ads that the average American is exposed to on a daily basis: between 4 and 10 thousand. I think it is interesting that people will watch the Super Bowl only to see these particular ads. I'm one of those people, since I don't care about football, and in the past I would only watch the funny ads during breaks in the game. I hadn't previously thought about the marketing implications of that, but it makes sense that $2 billion is spent on those advertising spots each year!

The 2017 study is just as interesting; I can't even estimate how many videos I see every day. I may purposely seek out a few videos a day, such as the one for this class, but there are countless others I am exposed to just from scrolling through social media and seeing what others have shared or what ads have been picked by bots for me to see. Side note: many viral videos are extremely short and have little speech in them, such as Vines, which are very different from TED or TEDx talks, which are much longer and focused on the speech being communicated. It would be interesting to see what popular Vines have in common with each other, as opposed to other short videos that do not go viral.

The visual intro to machine learning part 2 was much easier to understand with the graphs and animated visuals. What I got from it is that you must decide how much bias and how much variance to accept in your model. Can machine learning be used to make better machine learning algorithms or models?

The authors of Deitz 2016 combined self-report measures with EEG measures to produce a model that more accurately predicts how much a voter likes a Super Bowl ad. I would have liked to see ads categorized by type of product: food, beverages, cars, etc. It would also be interesting to look at people who consistently rated ads below or above average to see if their EEGs differ in an interesting way. Honestly, I don't like this type of research, as it can be used to undermine consumer choice by designing more deceitful yet effective ads.

Deitz 2017 looked at TED talks and YouTube talks and tried to correlate the narrativity of the talk with sharing by viewers. It does not surprise me at all that people share videos with a faster speech pace, as faster speakers may exude confidence.

Crash Course Machine Learning (Video)

Thinking about decision trees as a sequence of hierarchical IF/THEN/ELSE statements really helped me for some reason. I know that neural networks are largely “black box” models, but I wonder what the approach would be for categorical inputs. It makes some sense to me how continuous variables could be transformed and strengthened or weakened by weighting, but I wonder how neural networks would perform when the inputs are binary or have multiple categorical levels.

Machine Learning Scrolly Part II

This was a very useful illustration of the bias-variance tradeoff. While I understood this at a conceptual level, seeing it in action (plotted on a scatterplot, along with the actual sorting) really helped my understanding. It was also useful to hear these problems represented in a more design-based context rather than in raw mathematics. That is, they stated that stumps lead to BIAS, which results in CONSISTENT errors in classification, while too many branches lead to overfitting due to VARIANCE, leading to INCONSISTENT errors from idiosyncratic patterns in the data. Conceptually, these seem to be the same constraints that govern specialization in AIs. For example, there are many systems which do something well (e.g., chess) but do quite poorly at everything else. Would a more general AI (like Pei Wang’s NARS) then be likely to produce more biased but consistently erroneous responses?

Deitz (2016)

It is very difficult for me to get out of methodological critique mode. There were a few things that I was not too fond of beyond the oversimplification of un/conscious decision making. For example, they mention “control variables” that contribute to alternate explanations (e.g., number of subscribers, brand familiarity), but then include them all in their regression model; they did not “control” for them, they added them. I also skimmed one of the articles cited often in the paper (Penn, 2006) and realized that they should really talk to someone in psychology before inventing their own theories of memory.

My methodological beef aside, there is a larger, slightly less curmudgeony question I’d raise: does sharing of these commercials have a meaningful relationship with actual consumer behavior? It is great that this measure of “neuroengagement” slightly improved their model, but does it really have real world consumer implications?

Deitz (2017)

This article was interesting in its use of LIWC and Coh-Metrix to analyze TED talks. I was thrown off by the reporting of the regression here, too, but it seems that narrativity did contribute quite a bit to the results. There is some overlap with the literature on the effect of interest in learning, where more narrative and coherent texts tend to lead to more interest, as do specific “seductive” topics (e.g., sex, violence), though the latter may lead to problems in learning. I also would have liked to see some measure of topic. That is, the more emotional and narrative stories might fall into certain categories that the prototypical TED audience (which I see as well-educated, but not necessarily scientifically minded across the board) may prefer sharing. It makes me very curious about Coh-Metrix and LIWC as well. I recently tried reading up on LSA and believe I have the gist, but I’m curious how these other methods vary (e.g., in how they represent the features they measure).

P.S. The illustrations and descriptions provided for boosting were very interesting and informative. It makes a lot more sense seeing it graphically, step by step.

Machine Learning & A.I. - Crash Course

The first interesting concept is support vector machines, which can use polynomial functions to provide decision boundaries. What are the advantages and disadvantages of decision trees compared with support vector machines?

Model Tuning and the Bias-Variance Tradeoff

From this section, I learned that if the model is too simple it will have more bias, and if it is too complex it will have more variance (and make errors by overfitting). The question is what methods we can use to balance them. I also wonder whether, when splitting the data, the order of the predictors matters.

Deitz 2016

This is an interesting paper that compares the effectiveness of EEG-based measures and ratings. I think one problem is that, when using EEG, participants need to wear an electrode cap in a lab setting, which may influence their attention and emotion. Also, the raters were a different group of U.S. citizens, which makes me unsure whether the two measures can be compared.

Deitz 2017

There are 7 hypotheses in this paper, some of which are moderation hypotheses. I think it would be better if the authors added some figures showing the moderating effects. The authors used two text analysis tools, Coh-Metrix and LIWC. What are their differences, and how do they measure certain aspects of the text?

The machine learning crash course video was helpful in breaking down the basics of what we have been talking about. I appreciated the detailed breakdown of the "artificial neuron," using the familiar terms associated with neurons to explain how it works. I can't even imagine how complex a strong AI that could compete with human intelligence would be, and I also can't decide whether its existence would be more impressive or more terrifying.

The scrolly again was a great visual tool, and I like how they break down each step of their process into simple parts to make it easier to understand. My main question from this scrolly is how to know what the ideal minimum error is, and if there is a way to find that ideal number or if it is just a process of trial and error.

Deitz 2016 was very interesting because, while I have studied advertising and its effects in the past, I have never considered the complex statistical methods needed to process the data involved in those studies. While advertising can be beneficial in some ways, and the study of it is very interesting, it is also slightly terrifying knowing how much power advertisers could have as they continue to research the link between neuroscience and real world behavior.

Deitz 2017 interested me because I had never really considered what would make a video "go viral," though the factors they mentioned did make sense as highly influential ones. I wonder if the length of the video would also have an effect, as a previous commenter mentioned that many videos that go viral today are short, such as Vines. It is often said that attention spans are growing shorter because of the massive amount of information we are constantly exposed to and the ease of access through smartphones, etc., so I wonder whether length would have any effect on videos that are otherwise comparable.

I find videos such as the crash course one entertaining and useful for beginning knowledge in a domain, but I do wish it went into more depth about the details of neural networks. I think bringing up the difference between weak AI and general AI is important. Maybe it was a question of video length, but I think it should’ve touched on AlphaZero rather than just AlphaGo. While it’s very far from actual AGI, it is a more general system than many we’ve had, since it doesn’t just play Go. Given only the rules, AlphaZero teaches itself games such as chess, shogi, and Go from scratch through self-play, and it develops strategies that differ from the ones humans might use. So, while it’s still just a game-playing AI, it is much more general than past game-playing systems.

In the visual intro to machine learning part 2, I found it particularly useful when it discussed minimum node size. That was actually a technique I was entirely unaware of, but it makes perfect sense as a way to reduce variance (overfitting). What I find most interesting about many of these machine learning methods, in both their technique and what they’re trying to do, is that achieving equilibrium seems to be a central component. While some of these systems are not too similar to biological ones, I find this pattern of efficient systems seeking equilibrium to be a fascinating one.

For the Deitz 2016 article, I really liked the focus on implicit processing of ad engagement. I understand how traditional measures like surveys of opinions and attitudes can be useful. However, from a psychology standpoint, advertising plays heavily on unconscious processes, so a straightforward, explicit measure does seem to miss an essential component. Given what we know about the psychology of advertising, I’d say this is a much-needed step toward getting to the heart of what certain ads are doing. At the same time, as with social psychology in the past, I do think that this kind of research has potential for abuse. I agree with Zach’s questioning. The article takes a very neutral stance by not addressing these problems; it seems concerned with the usefulness of what can be gleaned from neuromarketing research. Especially because knowledge acquisition in most domains is an amoral enterprise, it is always important to ask ourselves what certain knowledge is being used for. With the severe financial implications of understanding how advertising affects people, I find myself wondering whether the knowledge gained from neuromarketing research will be used surgically, simply to maximize profits, or whether some will indeed apply this kind of understanding ethically.

As I said in the section on the 2016 article, implicit processing has great influence on what we react to and how we react to it. Because of this, I do think understanding exactly which features of media captivate us is an important endeavor. While I still share certain concerns about how this knowledge can be used to influence people, understanding the kinds of verbal and prosodic features explored in this study could also be used to bring those processes into consciousness. This kind of understanding could also help people guard against unconscious influence, which is essential to being able to judge media on its actual content rather than being emotionally captivated. It also allows those with good messages to learn ways to make their messages more powerful, but again, this is something that must be tempered with care.

Deitz et al. (2016) provide a scientific rationale for how the subconscious mind influences emotions during Super Bowl ads. I found it fascinating that EEG can detect likes and dislikes. Deitz (2017) analysed TED talks using the complex tools Coh-Metrix and LIWC to determine virality.

The Machine Learning and AI video explains machine learning with a good balance of simplicity and complexity, relating it to AI both big and small. There were lots of definitions and review, some good to be reminded of, and I appreciated the connections mentioned. Breaking down concepts was helpful. It did make me wonder: how complex a problem (up to how many different variables) is too much even for machine learning? Additionally, the r2d3 demonstration once again did a great job of visualizing the concept of the bias-variance trade-off. Piggybacking off of what John commented about running different things until something winds up being fairly optimal, is there an unofficial error rate that a model aims to reach when adjusting the complexity/simplicity of the model?

Deitz et al. (2016, 2017)

Both of these articles posed very interesting questions. With the Super Bowl ad experiment, I wonder if the intensity of the viewers' reactions was correlated at all with their response to the Super Bowl in general. That is, did their neurological response to the game itself influence their attention and response to the subsequent commercials? I think this was mentioned earlier, but I would be interested to see if the same results were produced if the commercials were shown during a regular (but widely popular) television show.

In the discussion section of the Narrativity and Prosodic Features article, the authors mention future work focused on “the effects of discrete emotions such as anger, sadness, and fear” (Deitz, 2017). As I was reading through the article, one question kept coming back to me: would the same results hold if the emotion were negative, in contrast to what was tested in this particular study?

The content on the webpage was very good and helped me better understand the process of boosting and what methods can be used to better fit the data. However, while we pursue a close fit to the data, we still need to pay attention to how well the model generalizes. How do we maintain the balance between the two?

Deitz, 2016

Using social media data to predict individual behavior is becoming more common. For example, many studies focus on how to use social media data to analyze an individual's personality. However, to train such a model, researchers still have to assign the labels manually. In this sense, the model still needs to be made more objective.

Deitz, 2017

This study analyzes how narrativity and prosodic features can promote the spread of videos, and it provides a theoretical and empirical basis for putting this into practice. I think it is very practical.